Your AI Agent Gave the Wrong Answer. Now What?

Synalinks Team


Picture this. Your support agent tells a customer they're eligible for a refund, but they're not. Your analytics pipeline flags a revenue spike, but it's a data join gone wrong. Your internal assistant cites a policy that was updated six months ago.

These aren't hypotheticals. They're the daily reality of teams running AI in production. The agent sounds confident. The output looks right. But somewhere between retrieval and response, something slipped.

This is the hallucination problem. And it's the reason we built Synalinks.

Why RAG Fails: The Gap in Today's AI Agent Stack

Most production AI systems follow a familiar pattern: take a user query, retrieve relevant documents with a vector search, and hand everything to a language model. This is RAG (Retrieval-Augmented Generation), and it's a genuine improvement over prompting alone.

But RAG has a structural weakness. The language model still has to interpret the retrieved documents. It has to decide what's relevant, reconcile conflicting information, and reason over relationships it was never explicitly taught. That's where things break.
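To show where that interpretation burden lands, here's a minimal sketch of the retrieve-then-prompt half of a RAG pipeline. It's illustrative only. A toy bag-of-words vector stands in for a learned embedding model, and `embed`, `cosine`, `retrieve`, and `build_prompt` are hypothetical helpers, not any particular library's API.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector (hypothetical)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (ties keep input order)."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Paste retrieved text into the prompt; the model must do all the interpreting."""
    bullets = "\n".join(f"- {doc}" for doc in context)
    return f"Answer using this context:\n{bullets}\n\nQuestion: {query}"


docs = [
    "Refunds are available within 30 days of purchase.",
    "The refund policy was updated in March.",
    "Shipping takes 3 to 5 business days.",
]
query = "am I eligible for a refund"
print(build_prompt(query, retrieve(query, docs)))
```

Nothing in this pipeline checks eligibility. The policy update ranks first only because it shares the word "refund", and reconciling the two snippets is left entirely to the model.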

The issue isn't retrieval. It's reasoning.

Vector databases are excellent at finding similar content. But similarity isn't understanding. When your agent needs to answer "Which customers on the Enterprise plan haven't renewed in 90 days and have open support tickets rated critical?", that's not a similarity search. That's multi-step reasoning over structured relationships, with temporal constraints.

No amount of prompt engineering fixes this. You need a different layer.
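For contrast, here's roughly what the Enterprise-renewal question looks like once the data is already structured: a relationship hop plus a date filter, not a similarity ranking. This is a plain-Python sketch, not Synalinks' query interface. The `Customer` and `Ticket` types, their field names, and the reading of "haven't renewed in 90 days" as "last renewal more than 90 days ago" are all assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Customer:  # hypothetical schema
    customer_id: str
    plan: str
    last_renewal: date


@dataclass(frozen=True)
class Ticket:  # hypothetical schema
    customer_id: str
    status: str    # e.g. "open" or "closed"
    severity: str  # e.g. "critical" or "low"


def enterprise_at_risk(customers, tickets, today, window_days=90):
    """Enterprise customers with no renewal in `window_days` and an open critical ticket."""
    cutoff = today - timedelta(days=window_days)
    # Relationship hop: which customers own an open, critical ticket?
    critical_open = {
        t.customer_id for t in tickets
        if t.status == "open" and t.severity == "critical"
    }
    # Attribute filter + temporal constraint + join on customer_id.
    return sorted(
        c.customer_id
        for c in customers
        if c.plan == "Enterprise"
        and c.last_renewal < cutoff
        and c.customer_id in critical_open
    )


customers = [
    Customer("acme", "Enterprise", date(2024, 1, 15)),
    Customer("globex", "Enterprise", date(2024, 6, 1)),
    Customer("initech", "Pro", date(2023, 12, 1)),
    Customer("umbrella", "Enterprise", date(2024, 2, 1)),
]
tickets = [
    Ticket("acme", "open", "critical"),
    Ticket("globex", "open", "critical"),
    Ticket("initech", "open", "critical"),
    Ticket("umbrella", "closed", "critical"),
]
print(enterprise_at_risk(customers, tickets, today=date(2024, 6, 30)))  # ['acme']
```

Each condition is explicit, so a customer either satisfies all three constraints or doesn't. There's no similarity threshold to tune and no room for the model to "decide" what counts as recent.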

How Structured Knowledge Gives AI Agents Deterministic Answers

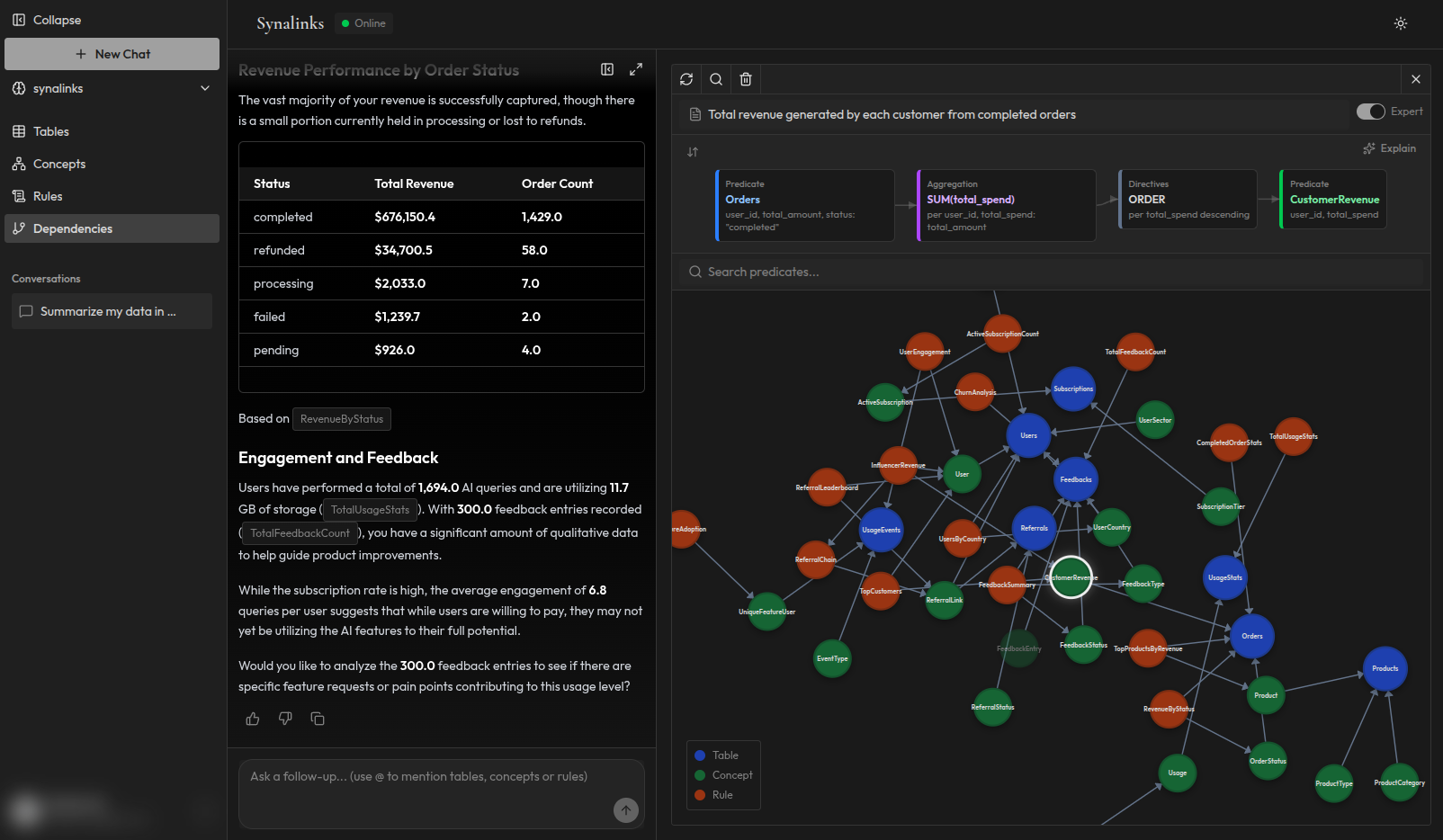

Synalinks Memory is that layer. Instead of feeding raw documents to a language model and hoping for the best, Synalinks takes a fundamentally different approach:

- Knowledge is structured before it's used. Your data, from databases, spreadsheets, files, or APIs, is automatically organized into verified concepts, relationships, and rules. Not embeddings. Not chunks. Actual structured knowledge.

- Reasoning is deterministic. When your agent queries Synalinks Memory, it doesn't "generate" an answer. It derives one, through rule-based reasoning over your knowledge graph. Same question, same data, same answer, every single time.

- Every answer is traceable. Each result comes with a complete reasoning chain. You can see exactly which rules fired, which data points were used, and why the system reached its conclusion. Nothing is a black box.

This isn't a minor improvement. It's a different paradigm. Your AI agent goes from "probably right" to "provably right."
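To make "derived, not generated" and "traceable" concrete, here's a toy forward-chaining rule engine. It is not how Synalinks Memory is implemented; the fact encoding, the `Rule` type, and the `forward_chain` and `explain` helpers are hypothetical. It illustrates two properties from the list above: rules run in a fixed order over a sorted snapshot of the facts, so the same inputs always yield the same conclusions, and every derived fact records which rule fired and which facts it used.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

Fact = tuple[str, ...]
Derivation = tuple[Fact, tuple[Fact, ...]]  # (conclusion, premises)


@dataclass(frozen=True)
class Rule:  # hypothetical rule representation
    name: str
    apply: Callable[[tuple[Fact, ...]], Iterable[Derivation]]


def forward_chain(facts, rules):
    """Apply rules until no new facts appear; record how each new fact was derived."""
    known = set(facts)
    trace = {}  # conclusion -> (rule name, premises)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            # Sorting the snapshot makes iteration order independent of set hashing.
            for conclusion, premises in rule.apply(tuple(sorted(known))):
                if conclusion not in known:
                    known.add(conclusion)
                    trace[conclusion] = (rule.name, premises)
                    changed = True
    return known, trace


def explain(fact, trace, depth=0):
    """Render the reasoning chain behind `fact` as indented lines."""
    pad = "  " * depth
    if fact not in trace:
        return [f"{pad}{fact}  [input data]"]
    rule_name, premises = trace[fact]
    lines = [f"{pad}{fact}  <- rule '{rule_name}'"]
    for premise in premises:
        lines.extend(explain(premise, trace, depth + 1))
    return lines


def eu_supplier(facts):
    # A supplier is an EU supplier if its country is an EU member.
    eu = {f[1] for f in facts if f[0] == "eu_member"}
    for f in facts:
        if f[0] == "supplier_country" and f[2] in eu:
            yield ("eu_supplier", f[1]), (f, ("eu_member", f[2]))


def eu_sourced(facts):
    # A part is EU-sourced if it comes from an EU supplier.
    eu_suppliers = {f[1] for f in facts if f[0] == "eu_supplier"}
    for f in facts:
        if f[0] == "sourced_from" and f[2] in eu_suppliers:
            yield ("eu_sourced", f[1]), (f, ("eu_supplier", f[2]))


facts = {
    ("eu_member", "DE"),
    ("supplier_country", "acme_gmbh", "DE"),
    ("supplier_country", "pacific_co", "JP"),
    ("sourced_from", "bolt-7", "acme_gmbh"),
    ("sourced_from", "chip-2", "pacific_co"),
}
rules = [Rule("eu_supplier", eu_supplier), Rule("eu_sourced", eu_sourced)]
known, trace = forward_chain(facts, rules)
print("\n".join(explain(("eu_sourced", "bolt-7"), trace)))
```

The `explain` output is the reasoning chain: the `eu_sourced` rule fired on a `sourced_from` fact plus a derived `eu_supplier` fact, which in turn came from two input facts. A part like `chip-2` simply has no derivation, rather than a guessed answer.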

AI Agent Hallucination vs Deterministic Reasoning in Practice

Say you're building an AI agent for a logistics company. The agent needs to answer questions like:

- "Which shipments from Supplier A are overdue by more than 48 hours?"

- "What's the average lead time for components sourced from the EU in Q4?"

- "Flag any orders where the delivery commitment conflicts with current warehouse capacity."

With a traditional RAG setup, you'd embed your shipping records, feed them to a model, and cross your fingers. Maybe it gets the date math right. Maybe it doesn't confuse "shipped" with "delivered." Maybe.

With Synalinks Memory, the system knows what "overdue" means in your domain, because you defined the rule. It knows the relationship between suppliers, shipments, and delivery commitments, because it extracted and verified that structure from your data. The reasoning follows the rules. The answer is deterministic. And if the data doesn't support a conclusion, the system tells you instead of guessing.
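As an illustration of a domain rule with an explicit "not enough data" outcome, here's a sketch of "overdue by more than 48 hours" for Supplier A. The `Shipment` fields and the exact definition (undelivered and more than 48 hours past the promised time, where only a delivery timestamp stops the clock) are assumptions chosen for the example, not Synalinks' rule syntax.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class Shipment:  # hypothetical schema
    shipment_id: str
    supplier: str
    promised_by: Optional[datetime]   # None if the record has no delivery commitment
    delivered_at: Optional[datetime]  # None until delivery; shipping does not stop the clock


def is_overdue(s: Shipment, now: datetime, threshold=timedelta(hours=48)) -> Optional[bool]:
    """Assumed domain rule: undelivered and more than `threshold` past the promise.

    Returns None when the data cannot support either conclusion.
    """
    if s.delivered_at is not None:
        return False
    if s.promised_by is None:
        return None  # abstain instead of guessing
    return now - s.promised_by > threshold


def overdue_from(shipments, supplier, now):
    """Split one supplier's shipments into (overdue, undetermined) ID lists."""
    overdue, undetermined = [], []
    for s in shipments:
        if s.supplier != supplier:
            continue
        verdict = is_overdue(s, now)
        if verdict is None:
            undetermined.append(s.shipment_id)
        elif verdict:
            overdue.append(s.shipment_id)
    return overdue, undetermined


now = datetime(2024, 3, 10, 12, 0)
shipments = [
    Shipment("S1", "Supplier A", datetime(2024, 3, 7, 12, 0), None),  # 72 h past promise
    Shipment("S2", "Supplier A", datetime(2024, 3, 8, 12, 0), None),  # exactly 48 h
    Shipment("S3", "Supplier A", None, None),                         # no commitment on file
    Shipment("S4", "Supplier A", datetime(2024, 3, 1), datetime(2024, 3, 2)),  # delivered
    Shipment("S5", "Supplier B", datetime(2024, 3, 1), None),         # other supplier
]
print(overdue_from(shipments, "Supplier A", now))  # (['S1'], ['S3'])
```

Because S3 has no promised date, it lands in the undetermined list instead of being silently counted as on time or late, and S2 sits exactly on the boundary, so "more than 48 hours" excludes it.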

Why we're writing this blog

We're launching this blog because we believe the industry is at an inflection point. AI agents are moving from demos to production, and the gap between "impressive prototype" and "reliable system" is exactly the problem we solve.

Here's what you'll find in upcoming posts:

- Architecture deep-dives. How deterministic reasoning over knowledge graphs actually works, and why it's different from symbolic AI approaches you may have seen before.

- Integration guides. Practical walkthroughs for connecting Synalinks Memory to your existing stack.

- Production patterns. Lessons from teams running AI agents with structured knowledge at scale.

- Research notes. Our thinking on the future of neuro-symbolic AI and where the field is heading.

We'll keep things concrete, technical, and honest. No hype. No hand-waving. Just the real problems and the engineering behind the solutions. Start with our guide on how to build an AI agent that never hallucinates.

Try it yourself

Synalinks Memory is available today with a free tier: no credit card, no sales call. Connect a data source, describe your domain, and see deterministic reasoning in action within minutes.

If you have questions, feedback, or a use case you'd like to discuss, reach out to us directly. We read every message.