GraphRAG vs Synalinks: Retrieval is Not Reasoning

Synalinks Team

GraphRAG has become the go-to answer when teams realize that plain vector RAG isn't enough. The idea is simple: use a language model to extract entities and relationships from your documents, build a graph, and then retrieve from that graph instead of (or alongside) a vector store.

It's a real step forward. But it still carries two fundamental limitations that most teams don't notice until they hit production: GraphRAG depends on LLMs for extraction, and it still only retrieves. It doesn't reason.

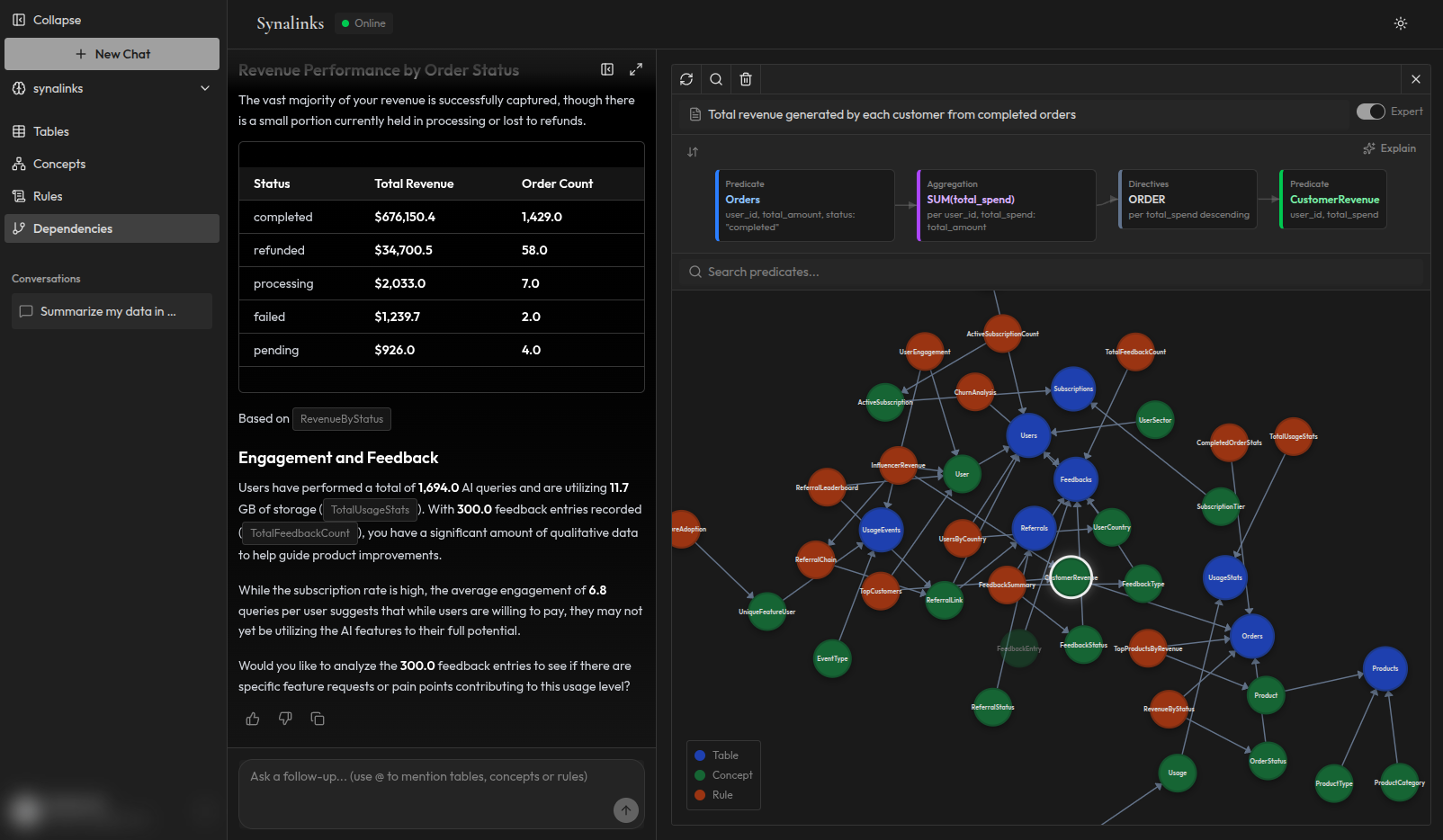

Synalinks Memory takes a completely different approach. No embeddings. No LLM-based extraction. Knowledge graphs are built from your semi-structured data using pure logic, instantly and at zero cost. And when a question comes in, the system reasons over the graph instead of just fetching relevant nodes.

Let's break down what this means in practice.

How GraphRAG builds its knowledge graph

GraphRAG, as popularized by Microsoft Research, works like this:

- Extraction: A language model reads your documents and identifies entities (people, companies, concepts) and the relationships between them

- Graph construction: The extracted triplets (entity-relationship-entity) are assembled into a graph. Communities of related nodes are detected and summarized

- Retrieval: When a question arrives, the system finds relevant parts of the graph and retrieves the associated summaries or subgraphs

- Generation: A language model uses the retrieved graph context to generate an answer
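The graph-construction and retrieval steps above can be sketched in a few lines. This is a toy illustration, not Microsoft's implementation: `extract_triplets` is a hypothetical stub standing in for the LLM call, the document names and triplets are invented, and community detection and summarization are left out.

```python
from collections import defaultdict

# Hypothetical stand-in for the LLM extraction step. A real pipeline would
# prompt a model here; this stub returns fixed triplets so the sketch runs.
def extract_triplets(document: str) -> list[tuple[str, str, str]]:
    canned = {
        "doc1": [("Acme", "acquired", "Widgets Inc")],
        "doc2": [("Widgets Inc", "supplies", "Globex")],
    }
    return canned.get(document, [])

def build_graph(documents):
    """Assemble (subject, relation, object) triplets into an adjacency map."""
    graph = defaultdict(list)
    for doc in documents:
        for subject, relation, obj in extract_triplets(doc):
            graph[subject].append((relation, obj))
    return graph

def retrieve(graph, entity, hops=2):
    """Collect the triplets reachable from `entity` within `hops` steps."""
    frontier, seen, context = [entity], {entity}, []
    for _ in range(hops):
        next_frontier = []
        for node in frontier:
            for relation, neighbor in graph.get(node, []):
                context.append((node, relation, neighbor))
                if neighbor not in seen:
                    seen.add(neighbor)
                    next_frontier.append(neighbor)
        frontier = next_frontier
    return context

graph = build_graph(["doc1", "doc2"])
context = retrieve(graph, "Acme")  # this context would be handed to the LLM
```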

This works better than vanilla RAG for questions that span multiple documents or require connecting information across topics. The graph structure helps surface connections that vector similarity alone would miss.

But there are real costs.

The problems with LLM-based extraction

It's expensive

Every document you add to the system needs to be processed by a language model. For a large corpus, this means thousands of LLM calls just to build the initial graph. And every time your data changes, you need to re-extract. The cost scales linearly with your data volume, and it adds up fast.
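To make the linear scaling concrete, here is a back-of-the-envelope sketch. The calls-per-document and price-per-call figures are assumptions chosen only for illustration; substitute the numbers for your own model and pipeline.

```python
def extraction_cost(num_docs, calls_per_doc, cost_per_call):
    """Total LLM spend for one full extraction pass over the corpus."""
    return num_docs * calls_per_doc * cost_per_call

# Hypothetical figures, chosen only to show the shape of the curve.
CALLS_PER_DOC = 3      # assumed number of model calls per document
COST_PER_CALL = 0.01   # assumed price per call, in dollars

for docs in (1_000, 10_000, 100_000):
    cost = extraction_cost(docs, CALLS_PER_DOC, COST_PER_CALL)
    print(f"{docs:>7} documents: ${cost:,.2f} per full extraction")
```

Ten times the documents means ten times the spend, and a full re-extraction after a schema change pays the same bill again.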

It's slow

Extraction isn't instant. Processing a few hundred documents can take minutes to hours depending on the model and the complexity of the text. If your data updates frequently, you're always running behind.

It's lossy

The LLM decides what counts as an entity and what counts as a relationship. It makes judgment calls about what's important and what to ignore. These judgment calls are probabilistic. Run the same extraction twice and you can get slightly different graphs. Important relationships might be missed entirely if the model doesn't recognize them as significant.

It works best on unstructured text

GraphRAG was designed to extract structure from unstructured documents: PDFs, articles, reports. But a huge portion of business data is already semi-structured: databases, spreadsheets, CSV files. Running an LLM over a SQL table to "discover" that customers have orders is like using a telescope to read a book that's right in front of you. The structure is already there.

How Synalinks builds its knowledge graph

Synalinks Memory takes a fundamentally different path:

- Connect your data: Point Synalinks at your databases, spreadsheets, or CSV files

- Logic-based extraction: The system reads the structure of your data and builds a knowledge graph from it. No LLM calls. No embeddings. Pure logic

- Instant graph: The knowledge graph is available immediately. No waiting for extraction pipelines to finish

- Reasoning: When a question arrives, the reasoning engine applies defined rules to the graph and derives an answer. It doesn't retrieve and hand off to an LLM. It reasons

No embeddings anywhere in the pipeline. No LLM calls during extraction. The knowledge graph is built from the actual structure and content of your data, not from a model's interpretation of it.
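As a rough illustration of what logic-based extraction can look like, the sketch below turns table rows into nodes and foreign keys into edges. It is not the Synalinks API: the table names, columns, and the `build_graph` helper are all hypothetical.

```python
# Hypothetical source tables, as they might come out of a database or CSV.
tables = {
    "customers": [
        {"id": 1, "name": "Acme", "plan": "Enterprise"},
        {"id": 2, "name": "Globex", "plan": "Starter"},
    ],
    "orders": [
        {"id": 10, "customer_id": 1, "total": 250},
        {"id": 11, "customer_id": 1, "total": 90},
    ],
}

# Declared structure: (table, column) points at rows of another table.
foreign_keys = {("orders", "customer_id"): "customers"}

def build_graph(tables, foreign_keys):
    """Every row becomes a node; every non-null foreign key becomes an edge."""
    nodes = {}
    edges = []
    for table, rows in tables.items():
        for row in rows:
            nodes[(table, row["id"])] = row
    for (src_table, column), dst_table in foreign_keys.items():
        for row in tables[src_table]:
            target = row.get(column)
            if target is not None:
                edges.append(((src_table, row["id"]), column, (dst_table, target)))
    return nodes, edges

nodes, edges = build_graph(tables, foreign_keys)
```

There is no model in the loop, so running `build_graph` twice on the same input yields the same graph.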

Zero-cost extraction changes everything

When extraction is free and instant, something important happens: you can change your mind.

With GraphRAG, changing your graph model is expensive. If you decide you need different entities or relationships, you have to re-extract everything. New LLM calls, new costs, new waiting. So teams tend to commit to a schema early and stick with it, even when their needs evolve.

With Synalinks, you can restructure your knowledge graph as often as you need. Want to model your data differently to answer a new category of questions? Just update the model and the graph rebuilds instantly from the same source data. No additional cost, no waiting.

This makes Synalinks ideal for teams that are still figuring out what questions they need their agents to answer. You can explore, iterate, and refine your knowledge model without worrying about burning through LLM credits every time you make a change.
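A minimal sketch of what "changing your mind" means here, assuming a small in-memory table: two alternative graph models are derived from the same rows, and switching between them is just a function call. The model functions and field names are illustrative, not part of Synalinks.

```python
rows = [
    {"id": 1, "name": "Acme", "plan": "Enterprise"},
    {"id": 2, "name": "Globex", "plan": "Starter"},
    {"id": 3, "name": "Initech", "plan": "Enterprise"},
]

def model_v1(rows):
    """First model: the plan is a plain property on each customer node."""
    nodes = {("customer", r["id"]): {"name": r["name"], "plan": r["plan"]} for r in rows}
    return nodes, []

def model_v2(rows):
    """Revised model: each plan is its own node, linked to its customers."""
    nodes, edges = {}, []
    for r in rows:
        nodes[("customer", r["id"])] = {"name": r["name"]}
        nodes[("plan", r["plan"])] = {}
        edges.append((("customer", r["id"]), "on_plan", ("plan", r["plan"])))
    return nodes, edges

# Same source rows, two graph shapes, no re-extraction step in between.
nodes_v1, edges_v1 = model_v1(rows)
nodes_v2, edges_v2 = model_v2(rows)
```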

Retrieval vs reasoning: the real gap

Here's the fundamental difference that matters most.

GraphRAG retrieves. It finds relevant parts of the graph and hands them to a language model to interpret. The LLM still has to figure out the answer from the retrieved context. It's better context than what vanilla RAG provides, but the final step is still probabilistic generation.

Synalinks reasons. When you ask a question, the reasoning engine traverses the graph, applies defined rules, and derives an answer. The language model's job is limited to formatting the result in natural language. It doesn't interpret, infer, or generate the substance of the answer.

Here's what that looks like in practice:

Question: "Which customers on the Enterprise plan haven't logged in for 90 days and have renewals this quarter?"

GraphRAG approach: The system finds graph nodes related to "Enterprise plan", "login activity", and "renewals". It retrieves these subgraphs and passes them to the LLM. The model reads the context and tries to connect the dots. If some login dates are missing from the retrieved nodes, the model might still produce an answer based on what it has, or it might miss customers entirely.

Synalinks approach: The reasoning engine queries the customer entities directly. It checks the plan property, compares last_login against today minus 90 days, and checks renewal_date against the current quarter. The rules are explicit. The answer is derived. If data is missing for a customer, that customer is flagged as incomplete, not silently skipped.

The difference: one approach hopes the LLM interprets the retrieved context correctly. The other gives you an answer derived by explicit rules, with a full reasoning chain you can inspect.
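The rule-based version of this question can be sketched as plain code. This is an assumption-laden illustration rather than how the Synalinks engine is implemented: the customer records are invented, the field names follow the ones mentioned above, and "this quarter" is taken to mean the calendar quarter containing `today`.

```python
from datetime import date, timedelta

def quarter_bounds(today):
    """First and last day of the calendar quarter containing `today`."""
    first_month = 3 * ((today.month - 1) // 3) + 1
    start = date(today.year, first_month, 1)
    if first_month == 10:
        end = date(today.year, 12, 31)
    else:
        end = date(today.year, first_month + 3, 1) - timedelta(days=1)
    return start, end

def at_risk_enterprise(customers, today):
    """Apply the three explicit rules; flag records that cannot be evaluated."""
    cutoff = today - timedelta(days=90)
    q_start, q_end = quarter_bounds(today)
    matches, incomplete = [], []
    for c in customers:
        plan = c.get("plan")
        last_login = c.get("last_login")
        renewal = c.get("renewal_date")
        if plan is None:
            incomplete.append(c["name"])      # cannot tell which plan applies
            continue
        if plan != "Enterprise":
            continue                          # rule 1: Enterprise plan only
        if last_login is None or renewal is None:
            incomplete.append(c["name"])      # flagged, not silently skipped
            continue
        if last_login <= cutoff and q_start <= renewal <= q_end:
            matches.append(c["name"])         # rules 2 and 3 both hold
    return matches, incomplete

customers = [
    {"name": "Acme", "plan": "Enterprise",
     "last_login": date(2024, 1, 10), "renewal_date": date(2024, 6, 1)},
    {"name": "Globex", "plan": "Enterprise",
     "last_login": date(2024, 5, 1), "renewal_date": date(2024, 6, 15)},
    {"name": "Initech", "plan": "Enterprise",
     "last_login": None, "renewal_date": date(2024, 4, 20)},
    {"name": "Umbrella", "plan": "Starter",
     "last_login": date(2023, 12, 1), "renewal_date": date(2024, 5, 20)},
]

matches, incomplete = at_risk_enterprise(customers, today=date(2024, 5, 15))
```

With this sample data, Acme satisfies all three rules, while Initech lands in `incomplete` because its last login is unknown.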

When GraphRAG makes sense

GraphRAG is a good choice when:

- Your data is primarily unstructured text (research papers, long documents, reports) and you need to extract structure from prose

- You don't need deterministic answers. Approximate, "good enough" retrieval is acceptable

- Your graph model is stable. You've settled on what entities and relationships matter and don't expect to change it often

- You're extending an existing RAG pipeline and want better multi-document reasoning without a full architecture change

When Synalinks makes more sense

Synalinks Memory is the better fit when:

- Your data is already semi-structured: tables, databases, spreadsheets, files. The structure exists, so you don't need an LLM to guess at it

- You need real reasoning, not just better retrieval. Questions involve rules, conditions, temporal comparisons, and multi-hop logic

- Your domain model evolves. You need to restructure your graph as your understanding of the problem changes, without paying extraction costs every time

- Determinism matters. Same question, same data, same answer. Every time. With a full trace showing how the answer was derived

- Cost and speed matter. Zero-cost extraction means you can scale your knowledge graph without worrying about LLM bills
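To show what multi-hop logic with a trace can look like, here is a small sketch of a two-hop rule that returns both its verdict and the edges it used. The edge data, relation names, and the `entitled_to` rule are hypothetical and only illustrate the idea.

```python
edges = [
    ("Acme", "subscribes_to", "Enterprise"),
    ("Enterprise", "includes", "Priority Support"),
    ("Globex", "subscribes_to", "Starter"),
]

def entitled_to(customer, feature, edges):
    """Two-hop rule: customer -subscribes_to-> plan -includes-> feature.

    Returns (verdict, trace), where trace lists the edges that were followed.
    """
    trace = []
    for subject, relation, plan in edges:
        if subject == customer and relation == "subscribes_to":
            trace.append((subject, relation, plan))
            if (plan, "includes", feature) in edges:
                trace.append((plan, "includes", feature))
                return True, trace
    return False, trace

verdict, trace = entitled_to("Acme", "Priority Support", edges)
```

The same inputs always produce the same verdict and the same trace, which is what makes the answer auditable after the fact.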

Side-by-side comparison

| | GraphRAG | Synalinks Memory |
|---|---|---|
| Extraction method | LLM-based (probabilistic) | Logic-based (deterministic) |
| Uses embeddings | Yes | No |
| Extraction cost | LLM calls per document | Zero |
| Extraction speed | Minutes to hours | Instant |
| Best data type | Unstructured text | Semi-structured (tables, DBs, files) |
| Graph model changes | Re-extract everything (costly) | Rebuild instantly (free) |
| Query mechanism | Retrieval + LLM generation | Rule-based reasoning |
| Answer determinism | No (probabilistic) | Yes (deterministic) |
| Reasoning chains | No | Yes, full trace |
| Handles missing data | Silently skips or hallucinates | Explicitly flags incomplete data |

The bottom line

GraphRAG is a meaningful improvement over vanilla RAG. If you're working with large collections of unstructured documents and need to surface connections between them, it's a solid tool.

But if your data already has structure, and most business data does, you're paying an unnecessary tax by running it through an LLM just to extract what's already there. And you're still left without real reasoning at query time.

Synalinks Memory gives you instant, zero-cost knowledge graph extraction from your actual data, and a reasoning engine that derives answers instead of retrieving them. When your domain model changes, you can rebuild in seconds. When a question comes in, you get a provable answer, not a probabilistic guess.